2014-12-15 09:30:59+0800 [scrapy] INFO: Scrapy 0.24.4 started (bot: tutorial)

2014-12-15 09:30:59+0800 [scrapy] INFO: Optional features available: ssl, http11

2014-12-15 09:30:59+0800 [scrapy] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'tutorial.spiders', 'SPIDER_MODULES': ['tutorial.spiders'], 'BOT_NAME': 'tutorial'}

2014-12-15 09:30:59+0800 [scrapy] INFO: Enabled extensions: LogStats, TelnetConsole, CloseSpider, WebService, CoreStats, SpiderState

2014-12-15 09:30:59+0800 [scrapy] INFO: Enabled downloader middlewares: HttpAuthMiddleware, DownloadTimeoutMiddleware, UserAgentMiddleware, RetryMiddleware, DefaultHeadersMiddleware, MetaRefreshMiddleware, HttpCompressionMiddleware, RedirectMiddleware, CookiesMiddleware, ChunkedTransferMiddleware, DownloaderStats

2014-12-15 09:30:59+0800 [scrapy] INFO: Enabled spider middlewares: HttpErrorMiddleware, OffsiteMiddleware, RefererMiddleware, UrlLengthMiddleware, DepthMiddleware

2014-12-15 09:30:59+0800 [scrapy] INFO: Enabled item pipelines:

2014-12-15 09:30:59+0800 [dmoz] INFO: Spider opened

2014-12-15 09:30:59+0800 [dmoz] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2014-12-15 09:30:59+0800 [scrapy] DEBUG: Telnet console listening on 127.0.0.1:6023

2014-12-15 09:30:59+0800 [scrapy] DEBUG: Web service listening on 127.0.0.1:6080

2014-12-15 09:31:00+0800 [dmoz] DEBUG: Crawled (200) (referer: None)

2014-12-15 09:31:00+0800 [dmoz] DEBUG: Crawled (200) (referer: None)

2014-12-15 09:31:00+0800 [dmoz] INFO: Closing spider (finished)

2014-12-15 09:31:00+0800 [dmoz] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 516,

'downloader/request_count': 2,

'downloader/request_method_count/GET': 2,

'downloader/response_bytes': 16338,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2014, 12, 15, 1, 31, 0, 666214),

'log_count/DEBUG': 4,

'log_count/INFO': 7,

'response_received_count': 2,

'scheduler/dequeued': 2,

'scheduler/dequeued/memory': 2,

'scheduler/enqueued': 2,

'scheduler/enqueued/memory': 2,

'start_time': datetime.datetime(2014, 12, 15, 1, 30, 59, 533207)}

2014-12-15 09:31:00+0800 [dmoz] INFO: Spider closed (finished)
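The stats dump above can be read programmatically as well. The following is a minimal sketch, not part of Scrapy itself, that copies a few values from the log and derives the crawl duration and the average response size. The variable names are illustrative. Note that the stats timestamps read 01:30 while the log lines read 09:30+0800, because Scrapy records start_time and finish_time in UTC.

```python
from datetime import datetime

# Values copied from the 'start_time' and 'finish_time' entries of the stats dump.
start_time = datetime(2014, 12, 15, 1, 30, 59, 533207)
finish_time = datetime(2014, 12, 15, 1, 31, 0, 666214)

# Subtracting two datetimes yields a timedelta.
elapsed = finish_time - start_time
print("Crawl took %.3f seconds" % elapsed.total_seconds())

# Values copied from 'downloader/response_bytes' and 'downloader/response_count'.
response_bytes = 16338
response_count = 2
average_response_bytes = response_bytes / float(response_count)
print("Average response size: %.0f bytes" % average_response_bytes)
```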

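3.4. Extracting Items

3.4.1. Introducing Selectors

There are many ways to extract data from a web page. Scrapy uses its own mechanism based on XPath or CSS expressions: Scrapy Selectors.

Some example XPath expressions and their meanings: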

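<ul>

<li>/html/head/title: selects the &lt;title&gt; element inside the &lt;head&gt; element of an HTML document</li>

<li>/html/head/title/text(): selects the text inside the &lt;title&gt; element</li>

<li>//td: selects all &lt;td&gt; elements</li>

<li>//div[@class="mine"]: selects all div elements that have the attribute class="mine"</li>

</ul>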

<p>For more of XPath's powerful features, see the XPath tutorial.</p>
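<p>The four expressions listed above can be tried without Scrapy. The sketch below uses the standard library's xml.etree.ElementTree, which supports only a subset of XPath and requires well-formed markup, so it is an approximation of what Scrapy's selectors do rather than the same engine. The sample page is hypothetical.</p>

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample page (not taken from the tutorial's site).
PAGE = """
<html>
  <head><title>Sample page</title></head>
  <body>
    <div class="mine">first</div>
    <div class="other">second</div>
    <table><tr><td>a</td><td>b</td></tr></table>
  </body>
</html>
"""

root = ET.fromstring(PAGE)  # root is the <html> element

# /html/head/title: relative to the root element this is head/title
title = root.find("head/title")

# /html/head/title/text(): ElementTree exposes the text as an attribute
title_text = title.text

# //td: every <td> anywhere below the root
cells = [td.text for td in root.findall(".//td")]

# //div[@class="mine"]: every <div> whose class attribute equals "mine"
mine = [div.text for div in root.findall(".//div[@class='mine']")]

print(title_text, cells, mine)
```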

<p>To make XPath convenient to use, Scrapy provides the Selector class, which has four basic methods:</p>

<ul>

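<li>xpath(): returns a list of selectors, each representing a node selected by the XPath expression given as argument.</li>

<li>css(): returns a list of selectors, each representing a node selected by the CSS expression given as argument.</li>

<li>extract(): returns a unicode string containing the data selected by the selector.</li>

<li>re(): returns a list of unicode strings extracted by applying the regular expression given as argument.</li>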

</ul>

<p><strong>3.4.2. Extracting the data<br />

</strong></p>

<ul>

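<li>First, use the Chrome developer tools to view the page source and see what form the data you want takes (this is fairly tedious). An easier way is to right-click the content you are interested in and choose Inspect Element, which jumps straight to the relevant source.</li>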

</ul>

<p>Inspecting the page source shows that the site listings are inside the second &lt;ul&gt; element:</p>

<div class="jb51code">

<pre class="brush:py;">
&lt;ul class="directory-url" style="margin-left:0;"&gt;
  &lt;li&gt;Core Python Programming
    - By Wesley J. Chun; Prentice Hall PTR, 2001, ISBN 0130260363. For experienced developers to improve extant skills; professional level examples. Starts by introducing syntax, objects, error handling, functions, classes, built-ins. [Prentice Hall]
    &lt;div class="flag"&gt;&lt;img src="https://www.gxlcms.com/img/flag.png" alt="[!]" title="report an issue with this listing"&gt;&lt;/div&gt;
  &lt;/li&gt;
  ...(remaining entries omitted)...
&lt;/ul&gt;
</pre>

</div>

<p>The data can then be extracted as follows:</p>

<div class="jb51code">

<pre class="brush:py;">
# Select every &lt;li&gt; element on the page:
sel.xpath('//ul/li')

# Site descriptions:
sel.xpath('//ul/li/text()').extract()

# Site titles:
sel.xpath('//ul/li/a/text()').extract()

# Site links:
sel.xpath('//ul/li/a/@href').extract()
</pre>

</div>

<p>As mentioned above, each xpath() call returns a list of selectors, so further xpath() calls can be chained on them to dig into deeper nodes. We will use that property here:</p>

<div class="jb51code">

<pre class="brush:py;">
# Python 2 syntax, as used by Scrapy 0.24
for sel in response.xpath('//ul/li'):
    # XPath expressions here are relative to the current list item
    title = sel.xpath('a/text()').extract()
    link = sel.xpath('a/@href').extract()
    desc = sel.xpath('text()').extract()
    print title, link, desc
</pre>

</div>

<p>Modify the existing spider file accordingly:</p>

<div class="jb51code">

<pre class="brush:py;">
import scrapy


class DmozSpider(scrapy.Spider):
    name = "dmoz"
    allowed_domains = ["dmoz.org"]
    start_urls = [
        "http://www.dmoz.org/Computers/Programming/Languages/Python/Books/",
        "http://www.dmoz.org/Computers/Programming/Languages/Python/Resources/"
    ]

    def parse(self, response):
        # Called once for the response of every start URL
        for sel in response.xpath('//ul/li'):
            title = sel.xpath('a/text()').extract()
            link = sel.xpath('a/@href').extract()
            desc = sel.xpath('text()').extract()
            print title, link, desc
</pre>

</div>

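<p><strong>3.4.3. Using Items<br />

</strong>Item objects are custom Python dicts. You can read each field's value with standard dict syntax (the fields are the attributes that were assigned with Field earlier).</p>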

<div class="jb51code">

<pre class="brush:py;">
>>> item = DmozItem()
>>> item['title'] = 'Example title'
>>> item['title']
'Example title'
</pre>

</div>

<p>Spiders normally return the scraped data as Item objects. Finally, modify the spider class to store the data in Items, as follows:</p>

<div class="jb51code">

<pre class="brush:py;">
from scrapy.spider import Spider
from scrapy.selector import Selector

from tutorial.items import DmozItem


class DmozSpider(Spider):
    name = "dmoz"
    allowed_domains = ["dmoz.org"]
    start_urls = [
        "http://www.dmoz.org/Computers/Programming/Languages/Python/Books/",
        "http://www.dmoz.org/Computers/Programming/Languages/Python/Resources/",
    ]

    def parse(self, response):
        sel = Selector(response)
        # Each &lt;li&gt; of the directory listing describes one site
        sites = sel.xpath('//ul[@class="directory-url"]/li')
        items = []
        for site in sites:
            item = DmozItem()
            item['name'] = site.xpath('a/text()').extract()
            item['url'] = site.xpath('a/@href').extract()
            # Keep the text from "- " up to the carriage return that ends the line
            item['description'] = site.xpath('text()').re(r'-\s[^\n]*\r')
            items.append(item)
        return items
</pre>

</div>

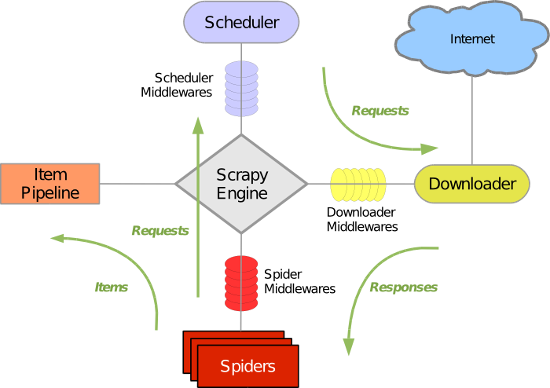

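<p><strong>3.5. Using an Item Pipeline<br />

</strong>After an Item has been collected by a Spider, it is passed to the Item Pipeline, where several components process it in a defined order.<br />

Each item pipeline component (sometimes just called an ItemPipeline) is a Python class that implements a simple method. It receives an Item and performs some action on it, and also decides whether the Item continues through the pipeline or is dropped and not processed any further.<br />

Typical uses of item pipelines include:</p>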

<ul>

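<li>Cleaning HTML data</li>

<li>Validating scraped data (checking that items contain certain fields)</li>

<li>Checking for duplicates (and dropping them)</li>

<li>Storing the scraped results, for example in a database or in XML, JSON or other files</li>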

</ul>

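<p>Writing your own item pipeline is easy. Each component is a standalone Python class that must implement process_item; open_spider and close_spider are optional:</p>

<p>(1) process_item(item, spider): called for every item by each pipeline component. It must return an Item (or subclass) object or raise a DropItem exception; dropped items are not processed by later pipeline components.</p>

<p>Parameters:</p>

<p>item (Item object): the Item returned by the parse method</p>

<p>spider (Spider object): the spider that scraped the Item</p>

<p>(2) open_spider(spider): called when the spider is opened.</p>

<p>Parameters:</p>

<p>spider (Spider object): the spider that was opened</p>

<p>(3) close_spider(spider): called when the spider is closed; any processing that must happen after the crawl finishes can be done here.</p>

<p>Parameters:</p>

<p>spider (Spider object): the spider that was closed</p>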
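<p>To show how the three methods fit together, here is a minimal sketch of a pipeline that writes every item to a file as one JSON object per line. The class name JsonWriterPipeline, the path parameter and the default file name items.jl are illustrative assumptions, not something the tutorial project defines. The class only relies on the method names described above, so it can be exercised without Scrapy.</p>

```python
import json


class JsonWriterPipeline(object):
    """Hypothetical pipeline that writes each item as one JSON line."""

    def __init__(self, path="items.jl"):
        # `path` is an illustrative parameter, not a Scrapy setting.
        self.path = path
        self.file = None

    def open_spider(self, spider):
        # Called once when the spider starts: acquire resources here.
        self.file = open(self.path, "w")

    def process_item(self, item, spider):
        # Called for every item: serialise it, then pass it on unchanged
        # so that later pipeline components still receive it.
        self.file.write(json.dumps(dict(item)) + "\n")
        return item

    def close_spider(self, spider):
        # Called once when the spider finishes: release resources here.
        self.file.close()
```

<p>Like any other pipeline, such a class would still have to be listed in ITEM_PIPELINES before Scrapy calls it.</p>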

<p>For example, the following pipeline drops items whose description contains a forbidden word:</p>

<div class="jb51code">

<pre class="brush:py;">
from scrapy.exceptions import DropItem


class FilterWordsPipeline(object):
    """Drop items whose description contains a forbidden word."""

    # put all words in lowercase
    words_to_filter = ['politics', 'religion']

    def process_item(self, item, spider):
        for word in self.words_to_filter:
            # unicode() is the Python 2 text type (Scrapy 0.24 runs on Python 2)
            if word in unicode(item['description']).lower():
                raise DropItem("Contains forbidden word: %s" % word)
        # No forbidden word found: pass the item on to the next component
        return item
</pre>

</div>

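<p>To activate an item pipeline, add it to ITEM_PIPELINES in settings.py; the setting is empty by default. Each key is the path of a pipeline class and each value is an integer that determines the order in which the pipelines run (lower values run first):</p>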

<div class="jb51code">

<pre class="brush:py;">
ITEM_PIPELINES = {'tutorial.pipelines.FilterWordsPipeline': 1}
</pre>

</div>

<p><strong>3.6. Storing the data<br />

</strong>Use the following command to save the scraped items to a file in JSON format:</p>

<p>scrapy crawl dmoz -o items.json</p>
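<p>The exported file is a JSON array with one object per item, and each field holds a list because extract() and re() return lists. A minimal sketch of reading such a file back follows. The sample string is a hypothetical stand-in for the real contents of items.json, the URL in it is invented, and the helper name first is an assumption.</p>

```python
import json

# Hypothetical stand-in for the contents of items.json; the real file holds
# whatever the spider scraped. Field names follow the spider shown earlier.
SAMPLE_EXPORT = """
[
  {"name": ["Core Python Programming"],
   "url": ["http://www.example.com/core-python"],
   "description": ["- By Wesley J. Chun; Prentice Hall PTR, 2001\\r"]}
]
"""


def first(values, default=""):
    """Return the first extracted value, stripped, or a default if empty."""
    return values[0].strip() if values else default


items = json.loads(SAMPLE_EXPORT)
for item in items:
    print(first(item["name"]), first(item["url"]))
```

<p>With a real export, json.load on the opened items.json file would replace the json.loads call on the sample string.</p>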

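<p><strong>4. Examples<br />

4.1 The simplest spider (the default Spider)<br />

</strong>It builds Request objects from the URLs in the start_urls instance attribute<br />

The framework takes care of executing the requests<br />

The response returned for each request is passed to the parse method for analysis</p>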

<p>The simplified source code:</p>

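<div class="jb51code">

<pre class="brush:py;">
from scrapy.http import Request
from scrapy.utils.deprecate import create_deprecated_class
from scrapy.utils.trackref import object_ref


class Spider(object_ref):
    """Base class for scrapy spiders. All spiders must inherit from this
    class.
    """

    name = None

    def __init__(self, name=None, **kwargs):
        # A spider needs a name, given as an argument or as a class attribute
        if name is not None:
            self.name = name
        elif not getattr(self, 'name', None):
            raise ValueError("%s must have a name" % type(self).__name__)
        self.__dict__.update(kwargs)
        if not hasattr(self, 'start_urls'):
            self.start_urls = []

    def start_requests(self):
        # Build one Request per start URL
        for url in self.start_urls:
            yield self.make_requests_from_url(url)

    def make_requests_from_url(self, url):
        # dont_filter=True exempts start URLs from duplicate filtering
        return Request(url, dont_filter=True)

    def parse(self, response):
        # Default callback; subclasses must override it
        raise NotImplementedError


# Backwards-compatible alias for the old class name
BaseSpider = create_deprecated_class('BaseSpider', Spider)
</pre>

</div>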

<p>An example of a callback that returns multiple requests (as well as items):</p>

<div class="jb51code">

<pre class="brush:py;">
import scrapy

from myproject.items import MyItem


class MySpider(scrapy.Spider):
    name = 'example.com'
    allowed_domains = ['example.com']
    start_urls = [
        'http://www.example.com/1.html',
        'http://www.example.com/2.html',
        'http://www.example.com/3.html',
    ]

    def parse(self, response):
        # Yield one item per heading found on the page
        for h3 in response.xpath('//h3').extract():
            yield MyItem(title=h3)

        # Yield one request per link; this assumes the hrefs are absolute URLs,
        # relative ones would first have to be joined with response.url
        for url in response.xpath('//a/@href').extract():
            yield scrapy.Request(url, callback=self.parse)
</pre>

</div>

<p>Constructing a Request object takes just two arguments: the URL and a callback function.</p>
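<p>A Request needs an absolute URL, while href attributes in real pages are often relative. One way to handle this, sketched here with the Python 3 standard library (Python 2 offers the same function in the urlparse module), is to resolve each href against the URL of the page it was found on before building the request. The helper name absolute_links and the sample hrefs are illustrative assumptions.</p>

```python
from urllib.parse import urljoin


def absolute_links(page_url, hrefs):
    """Resolve every href against the URL of the page it was found on."""
    return [urljoin(page_url, href) for href in hrefs]


links = absolute_links(
    "http://www.example.com/1.html",
    ["2.html", "/docs/3.html", "http://www.example.com/4.html"],
)
print(links)
```

<p>Inside a spider callback, page_url would be response.url and each resolved link could then be passed to scrapy.Request.</p>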

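<p><strong>4.2 CrawlSpider<br />

</strong>A spider usually has to decide which links on a page should be followed and which pages are dead ends whose links need no further crawling. CrawlSpider provides a useful abstraction for this, the Rule, which makes such crawls simple: you only tell Scrapy in the rules which links should be followed.<br />

Recall the spider we used to crawl the mininova site:</p>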

<div class="jb51code">

<pre class="brush:py;">
# Import paths as of Scrapy 0.24
from scrapy.contrib.linkextractors import LinkExtractor
from scrapy.contrib.spiders import CrawlSpider, Rule

# TorrentItem is assumed to be defined elsewhere in the project


class MininovaSpider(CrawlSpider):
    name = 'mininova'
    allowed_domains = ['mininova.org']
    start_urls = ['http://www.mininova.org/yesterday']
    # Pages whose URL matches /tor/ followed by digits go to parse_torrent
    rules = [Rule(LinkExtractor(allow=[r'/tor/\d+']), 'parse_torrent')]

    def parse_torrent(self, response):
        torrent = TorrentItem()
        torrent['url'] = response.url
        torrent['name'] = response.xpath("//h1/text()").extract()
        torrent['description'] = response.xpath("//div[@id='description']").extract()
        torrent['size'] = response.xpath("//div[@id='specifications']/p[2]/text()[2]").extract()
        return torrent
</pre>

</div>

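<p>The rules in the code above mean: responses for URLs matching /tor/\d+ are handed to parse_torrent, and the links on those responses are not followed any further (when a callback is given, follow defaults to False).<br />

The official documentation has another example:</p>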

<div class="jb51code">

<pre class="brush:py;">
rules = (
    # Extract links matching 'category.php' (but not 'subsection.php') and
    # follow them (no callback means follow defaults to True)
    Rule(LinkExtractor(allow=(r'category\.php', ), deny=(r'subsection\.php', ))),

    # Extract links matching 'item.php' and parse them with the spider's
    # parse_item method
    Rule(LinkExtractor(allow=(r'item\.php', )), callback='parse_item'),
)
</pre>

</div>
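<p>The allow and deny patterns in these rules are regular expressions tested against each link's URL. The sketch below approximates that filtering with the standard re module: a URL is kept if it matches at least one allow pattern (or none are given) and no deny pattern. It is a simplification for illustration, not LinkExtractor's actual code; the function name link_allowed and the sample URLs are assumptions.</p>

```python
import re


def link_allowed(url, allow=(), deny=()):
    """Keep a URL if it matches any `allow` pattern (or `allow` is empty)
    and matches none of the `deny` patterns."""
    if allow and not any(re.search(pattern, url) for pattern in allow):
        return False
    return not any(re.search(pattern, url) for pattern in deny)


# Hypothetical sample links, one per case discussed in the rules above.
urls = [
    "http://www.example.com/category.php?id=1",
    "http://www.example.com/subsection.php?id=2",
    "http://www.example.com/item.php?id=3",
    "http://www.mininova.org/tor/2676093",
]

followed = [
    u for u in urls
    if link_allowed(u, allow=(r'category\.php',), deny=(r'subsection\.php',))
]
torrents = [u for u in urls if link_allowed(u, allow=(r'/tor/\d+',))]
print(followed, torrents)
```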

<p>Besides Spider and CrawlSpider, Scrapy also provides XMLFeedSpider, CSVFeedSpider and SitemapSpider.</p> </div>


</div>

</div>


</div>

</div>

<!-- / 教程内容页 -->

</div>

</div>


</div>


</body>

</html>